Abstract

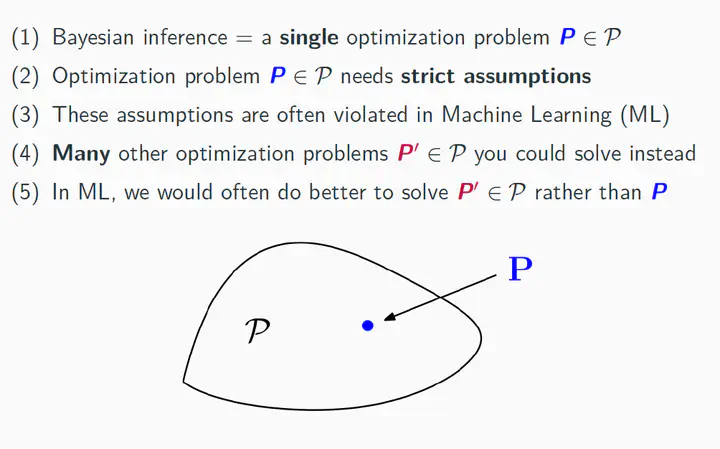

In this talk, I summarize a recent line of research and advocate for an optimization-centric generalisation of Bayesian inference. The main thrust of this argument relies on identifying the tension between the assumptions motivating the Bayesian posterior and the realities of modern large-scale Bayesian Machine Learning. Our generalisation is a useful conceptual device, but also has methodological merit: it can address various challenges that arise when the standard Bayesian paradigm is deployed in a Machine Learning context—including robustness to model misspecification, robustness to poorly chosen priors, or inference in intractable likelihood models.

Date

Mar 19, 2021 2:00 PM

Event

Research talks at various research organisations, including at Warwick University, Imperial College, Nottingham University, Leeds University, Facebook Research Core Data Science London, The Alan Turing Institute …